DeepSeek-R1-Lite-Preview: Advancing Transparent AI Reasoning

DeepSeek has unveiled its latest AI model, DeepSeek-R1-Lite-Preview, marking a significant advancement in transparent AI reasoning. The model matches or exceeds OpenAI’s o1-preview-level performance on key benchmarks while offering real-time visibility into its thought processes. This innovation addresses critical shortcomings in current AI models, particularly in complex reasoning tasks and transparency. Despite its strengths, the model faces challenges with certain logic problems and censorship issues. DeepSeek plans to release open-source versions and APIs, potentially reshaping the AI landscape.

Introduction

DeepSeek, an AI offshoot of Chinese quantitative hedge fund High-Flyer Capital Management, has made a significant leap forward with the release of DeepSeek-R1-Lite-Preview ¹. This new model represents a focused effort to address critical challenges in AI reasoning and transparency, areas where even advanced language models have struggled to make substantial progress.

Notably, it is the first reasoning model to follow OpenAI’s o1-preview and o1-mini, and the first Chinese reasoning model of its kind. This positioning underscores DeepSeek’s innovative approach and its potential impact on the global AI landscape.

Model Capabilities and Performance

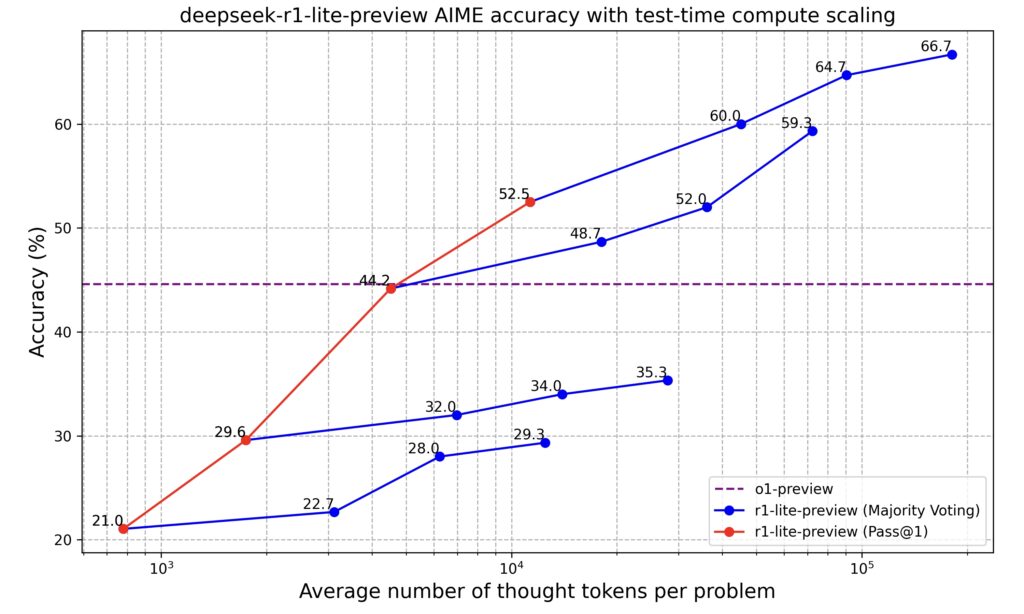

DeepSeek-R1-Lite-Preview has demonstrated impressive capabilities in complex reasoning tasks, particularly in mathematical reasoning and real-time problem-solving. According to DeepSeek, the model “matches or exceeds OpenAI’s o1-preview-level performance on AIME (American Invitational Mathematics Examination) and MATH benchmarks.” This achievement positions DeepSeek-R1-Lite-Preview as a strong contender in the field of advanced AI models.

A key feature of the model is that its scores on benchmarks like AIME improve steadily as its thought length increases. In other words, the model performs better when it is allowed to reason for longer, which suggests it is well suited to complex problems that require extended, multi-step reasoning.

Transparency and Chain-of-Thought Reasoning

Perhaps the most notable aspect of DeepSeek-R1-Lite-Preview is its transparent thought process, which users can watch unfold in real time. This contrasts sharply with OpenAI’s o1-preview and o1-mini, which hide their internal reasoning and show the user only a summary of it. By exposing each step of its reasoning as it happens, DeepSeek-R1-Lite-Preview offers a level of transparency that is currently unmatched among comparable reasoning models.

The model incorporates Chain-of-Thought (CoT) reasoning, allowing observers to follow the logical steps taken to reach a solution. This feature addresses a common limitation in current AI models: the lack of transparency in decision-making processes.

As one source notes, “Users can observe the model’s logical steps in real time, adding an element of accountability and trust that many proprietary AI systems lack.” ² This transparency can strengthen users’ trust in, and understanding of, how the AI reaches its conclusions, which makes it particularly valuable for education and research.

Availability and Future Plans

Currently, DeepSeek-R1-Lite-Preview is accessible through the DeepSeek Chat web interface, where users can try its advanced “Deep Think” mode for free with a generous limit of 50 messages per day.

Looking ahead, DeepSeek has announced plans to open-source the model. This move aligns with the company’s tradition of supporting the open-source AI community and could accelerate progress in the field by giving more people access to cutting-edge AI technology.

Pricing and Accessibility

DeepSeek has not yet announced official API pricing for the R1-Lite model, but it is expected to follow the company’s pattern of undercutting industry giants like OpenAI. For context, OpenAI’s o1-preview model is priced at $15 per million input tokens and a substantial $60 per million output tokens. This pricing can be prohibitively expensive for many developers and researchers, particularly for large-scale applications or extensive research projects. In addition, the model’s hidden “thinking” tokens are billed on top of the visible answer, and because there is no way to predict in advance how many of them a request will consume, budget planning and cost management become even harder.
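To make the budgeting problem concrete, here is a minimal sketch in Python. It assumes the o1-preview list prices quoted above ($15 per million input tokens, $60 per million output tokens) and assumes that hidden reasoning tokens are billed at the output rate. The function names and token counts are hypothetical and chosen only for illustration; they are not measurements of any real request.

```python
# Hypothetical cost estimator for a reasoning model.
# Assumptions: $15 / 1M input tokens, $60 / 1M output tokens, and hidden
# reasoning tokens billed at the output rate. Token counts are illustrative.

INPUT_PRICE_PER_M = 15.00   # USD per million input tokens
OUTPUT_PRICE_PER_M = 60.00  # USD per million output tokens (reasoning included)


def request_cost(input_tokens: int, visible_output_tokens: int,
                 reasoning_tokens: int) -> float:
    """Return the USD cost of one request under the assumed pricing."""
    # Reasoning tokens are never shown to the user but are still billed.
    billed_output = visible_output_tokens + reasoning_tokens
    return (input_tokens * INPUT_PRICE_PER_M
            + billed_output * OUTPUT_PRICE_PER_M) / 1_000_000


def cost_range(input_tokens: int, visible_output_tokens: int,
               min_reasoning: int, max_reasoning: int) -> tuple[float, float]:
    """Bound the cost when the reasoning-token count is only known as a range."""
    return (request_cost(input_tokens, visible_output_tokens, min_reasoning),
            request_cost(input_tokens, visible_output_tokens, max_reasoning))


if __name__ == "__main__":
    low, high = cost_range(2_000, 500, 1_000, 20_000)
    print(f"Estimated cost per request: ${low:.2f} to ${high:.2f}")
```

Running it prints `Estimated cost per request: $0.12 to $1.26`. With the prompt and visible answer held fixed, uncertainty in the reasoning budget alone produces a more than tenfold spread in cost, which is why per-request costs are hard to plan for.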

DeepSeek has consistently positioned its models as more cost-effective alternatives in the AI market. Previous DeepSeek models have been significantly cheaper than their competitors, often forcing other companies to lower their prices or offer free services to remain competitive. Given this history, it’s reasonable to expect that the R1-Lite model will also be priced more affordably, potentially at a fraction of OpenAI’s rates.

The anticipated lower costs of DeepSeek’s API could have far-reaching implications for the AI industry. More affordable access to advanced AI capabilities could democratize the use of these technologies, enabling a broader range of developers, researchers, and businesses to leverage powerful AI models in their work. This could lead to increased innovation and more diverse applications of AI across various sectors.

However, it’s important to note that until DeepSeek officially announces its API pricing for the R1-Lite model, these expectations remain speculative. The actual pricing will likely be a crucial factor in determining the model’s adoption rate and its impact on the competitive landscape of AI services.

Technical Aspects and Industry Context

The development of DeepSeek-R1-Lite-Preview highlights the emergence of new scaling laws in AI development, particularly in the area of inference scaling. The model’s improved accuracy with increased processing time showcases the potential of test-time compute, an approach that gives models extra processing time to complete tasks.

This focus on approaches like test-time compute represents a paradigm shift in how AI models are scaled. Microsoft CEO Satya Nadella commented on test-time compute during a keynote at Microsoft’s Ignite conference: “We are seeing the emergence of a new scaling law.” ³

Company Background and Impact

DeepSeek’s position as an AI offshoot of High-Flyer Capital Management, a Chinese quantitative hedge fund, provides interesting context to the development of DeepSeek-R1-Lite-Preview. The company has a history of releasing high-performance open-source AI technologies, with previous models forcing competitors to lower prices or offer free services.

One source mentions, “One of DeepSeek’s first models, a general-purpose text- and image-analyzing model called DeepSeek-V2, forced competitors like ByteDance, Baidu, and Alibaba to cut the usage prices for some of their models — and make others completely free.” ⁴ This track record suggests that the release of DeepSeek-R1-Lite-Preview could have similar implications for the AI market and competition among major players.

Limitations and Concerns

Despite its advanced capabilities, DeepSeek-R1-Lite-Preview is not without limitations. The model has reportedly struggled with certain logic problems, such as tic-tac-toe. It can also be jailbroken, allowing users to bypass its safeguards and elicit inappropriate content. ⁵

A significant concern is the issue of censorship. The model blocks politically sensitive queries related to Chinese leadership and events, likely due to government pressure and regulations on AI in China. As one source states, “Models in China must undergo benchmarking by China’s internet regulator to ensure their responses ‘embody core socialist values.’” ⁴ This censorship raises questions about the limitations imposed on AI models developed in certain regions and their potential global applicability.

There are also concerns about transparency in the model’s development process. While the reasoning process is transparent, DeepSeek has not yet released the full code for independent third-party analysis or benchmarking. The company has also not published a technical paper explaining how DeepSeek-R1-Lite-Preview was trained or architected, leaving questions about its underlying origins unanswered.

Significance and Potential Applications

The transparent reasoning process of DeepSeek-R1-Lite-Preview has enormous potential for educational applications. By providing insight into how the AI reasons, the model could deepen understanding for students and researchers alike, offering valuable learning opportunities in fields that require complex problem-solving.

The advancement in AI reasoning represented by DeepSeek-R1-Lite-Preview addresses critical shortcomings in current models, such as lack of transparency and limited reasoning capabilities. This progress could lead to more reliable and trustworthy AI systems across various domains.

For users, the model’s clear, step-by-step reasoning and improved accuracy in complex tasks could result in enhanced experiences across various applications, from problem-solving to research. The ability to follow the AI’s thought process could lead to better understanding and more effective collaboration between humans and AI systems.

Conclusion

DeepSeek-R1-Lite-Preview represents a huge step forward in AI technology, particularly in the areas of transparent reasoning and complex problem-solving. By matching or exceeding the performance of leading models while offering unprecedented insight into its decision-making process, DeepSeek has addressed key limitations in current AI systems.

However, the model’s limitations, including censorship issues and struggles with certain logic problems, highlight ongoing challenges in AI development. The planned release of open-source versions and APIs could further accelerate progress in the field, potentially reshaping the AI landscape.

Models like DeepSeek-R1-Lite-Preview that prioritize transparency and reasoning capabilities may play a crucial role in building trust and expanding the practical applications of AI across various domains. The impact of this development on education, research, and industry applications remains to be seen, but it marks a significant milestone in the pursuit of more capable and trustworthy AI systems.

Sources:

1. https://x.com/deepseek_ai/status/1859200141355536422

2. https://venturebeat.com/ai/deepseeks-first-reasoning-model-r1-lite-preview-turns-heads-beating-openai-o1-performance/

3. https://www.youtube.com/watch?v=3YiB2OvK6sY

4. https://techcrunch.com/2024/11/20/a-chinese-lab-has-released-a-model-to-rival-openais-o1/

5. https://x.com/elder_plinius/status/1859270409406869985
