From AI Safety Champion to Defense Contractor: Anthropic’s Fall From Grace

Anthropic announced a partnership with Palantir and AWS, marking a significant departure from its safety-first image and triggering widespread criticism within the AI community. The collaboration, announced amid discussions of a $40 billion valuation for Anthropic, enables military and intelligence applications of Claude AI models. This strategic shift, combined with recent price increases and apparent prioritization of government contracts, has led to accusations of abandoning core ethical principles. The AI community’s response has been particularly harsh, with prominent figures expressing disappointment and concern over the company’s new direction.

The Fall from Grace

In a stunning reversal that has sent shockwaves through the artificial intelligence community, Anthropic’s recent partnership announcement ¹ ² ³ with Palantir and AWS marks what many consider the end of its reputation as the ethical lighthouse in AI development. The company, which built its brand on the foundation of “AI safety first,” has faced unprecedented criticism following what appears to be a dramatic shift in its core principles.

The transformation has been particularly jarring given Anthropic’s careful cultivation of its image as the responsible alternative to more aggressive AI companies. Founded by Dario Amodei and others who left OpenAI due to concerns about the pace of AI development and safety considerations, Anthropic had positioned itself as the conscience of the AI industry. The company’s constitutional AI approach and public advocacy for careful, ethical AI development had earned it widespread respect among researchers and ethics advocates.

The speed of this reputation collapse has been remarkable. Within just two days of the partnership announcement, Anthropic’s carefully constructed image crumbled, with former supporters becoming vocal critics. The timing of this partnership, following closely after a controversial price increase for the Claude 3.5 Haiku model ⁴, has led many to question whether the company’s commitment to AI safety was merely a marketing strategy rather than a core principle.

Partnership Details and Technical Implementation

The technical architecture of the Anthropic-Palantir-AWS collaboration reveals both impressive capabilities and concerning implications. At its core, the partnership integrates Anthropic’s Claude 3 and 3.5 models with Palantir’s sophisticated data analytics platform, all hosted on AWS’s secure government cloud infrastructure. This integration operates at the IL6 (Impact Level 6) security classification, allowing access to classified “secret” level information – a significant step beyond typical commercial AI deployments.

The system’s performance metrics are remarkable, with the ability to process and analyze data volumes that would typically require two weeks of human analysis in just three hours. This 97% reduction in processing time represents an enormous leap in military and intelligence capabilities. However, this efficiency gain has raised red flags among AI safety advocates who question the wisdom of deploying such powerful systems in military contexts without adequate safety measures.
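As a rough sanity check on the reduction quoted above, the percentage can be recomputed from the two durations. The sketch below is illustrative only: the reading of "two weeks of human analysis" as ten 8-hour working days is an assumption, as is the alternative round-the-clock reading, and the function and variable names are hypothetical.

```python
def percent_reduction(before_hours: float, after_hours: float) -> float:
    """Return the percentage reduction going from before_hours to after_hours."""
    if before_hours <= 0:
        raise ValueError("before_hours must be positive")
    return (before_hours - after_hours) / before_hours * 100.0


# Assumption: "two weeks" means 10 working days of 8 hours each.
WORKING_HOURS = 10 * 8
# Alternative assumption: two calendar weeks, counted around the clock.
CALENDAR_HOURS = 14 * 24
# The three hours cited for the AI-assisted workflow.
AI_HOURS = 3

working_reduction = percent_reduction(WORKING_HOURS, AI_HOURS)
calendar_reduction = percent_reduction(CALENDAR_HOURS, AI_HOURS)

print(f"Working-hours reading:  {working_reduction:.2f}% reduction")
print(f"Calendar-hours reading: {calendar_reduction:.2f}% reduction")
```

Under the working-hours assumption the reduction comes out at roughly 96%, close to the quoted 97%; under the calendar reading it is roughly 99%. The exact figure therefore depends on how "two weeks" is counted.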

The technical implementation includes several controversial features:

- Direct integration with military intelligence systems

- Automated pattern recognition

- Streamlined data processing capabilities for tactical decision-making

- Integration with existing defense infrastructure

The technical concerns raised by experts have been particularly pointed. Former Google AI ethics co-head Dr. Timnit Gebru has highlighted concerns about the system’s reliability and potential for bias, particularly in high-stakes military applications where errors could have catastrophic consequences. On X, she commented pointedly: “Look at how they care so much about ‘existential risks to humanity.’” ⁵ Her criticism reflects a broader skepticism within the AI ethics community about the disconnect between Anthropic’s stated concerns about existential risk and its willingness to deploy potentially biased systems in military contexts. The irony of a company that built its reputation on preventing catastrophic AI outcomes now potentially contributing to immediate human harm has not been lost on observers.

Financial Motivations and Market Position

The financial aspects of Anthropic’s strategic pivot reveal a company potentially prioritizing market position over its original ethical stance. With discussions of a $40 billion valuation ongoing and total funding reaching $7.6 billion, the company’s pursuit of government contracts appears driven by commercial imperatives rather than technological necessity.

Amazon’s significant investment in Anthropic, coupled with the recent price hike of the Claude 3.5 Haiku model ⁴, suggests a shift toward aggressive monetization. The company’s financial trajectory has become even more complex with reports that Amazon is contemplating a multi-billion-dollar expansion of its investment, building upon its initial $4 billion commitment from last year ⁶. However, this additional funding comes with stringent conditions that could fundamentally alter Anthropic’s operational independence: Amazon requires the company to transition to its proprietary AI chips and utilize AWS infrastructure for model training.

This stipulation presents a significant technical and strategic challenge for Anthropic, which currently relies on Nvidia chips for its AI operations. The proposed switch to Amazon’s hardware ecosystem represents more than just a technical migration; it symbolizes a deeper entanglement with Amazon’s strategic ambitions in the AI industry. Amazon’s insistence on using its own chips reflects its broader strategy to challenge Microsoft and Google’s dominance in the AI infrastructure space, effectively using Anthropic as a high-profile showcase for its hardware capabilities.

The pricing strategy particularly rankled the AI community, with many developers and researchers pointing out that it contradicted Anthropic’s previous statements about making AI technology accessible and safe for all ⁴. This situation has been further complicated by concerns that Amazon’s hardware requirements could potentially impact model performance and development timelines, raising questions about whether Anthropic is prioritizing financial considerations over its technical and ethical commitments. The community’s skepticism has only intensified with these revelations, as they suggest a pattern of decisions driven increasingly by commercial pressures rather than the company’s original mission of advancing safe and accessible AI technology.

Market analysis indicates that government contracts in the AI sector have grown exponentially, with the Department of Defense’s AI spending increasing by 1,200% in recent years ⁷. Federal contracts in this space have expanded from $355 million to $4.6 billion, representing a lucrative opportunity that Anthropic seems unwilling to ignore.
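The two figures in the paragraph above can be checked against each other. The sketch below assumes that the 1,200% increase and the growth from $355 million to $4.6 billion describe the same spending trend, which the text implies but does not state outright; the function and variable names are hypothetical.

```python
def percent_increase(old: float, new: float) -> float:
    """Return the percentage increase going from old to new."""
    if old <= 0:
        raise ValueError("old must be positive")
    return (new - old) / old * 100.0


# Dollar figures as quoted in the article.
contracts_before = 355e6   # $355 million
contracts_after = 4.6e9    # $4.6 billion

increase = percent_increase(contracts_before, contracts_after)
multiple = contracts_after / contracts_before

print(f"Increase: {increase:.0f}%")
print(f"Multiple: {multiple:.1f}x")
```

The increase works out to just under 1,200%, or roughly a thirteenfold rise, so the quoted percentage and the dollar amounts are consistent with each other.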

Community Backlash and Reputational Damage

The AI community’s response to Anthropic’s partnership has been unprecedented in its intensity and unanimity. Social media platforms, particularly X (formerly Twitter) and LinkedIn, have become battlegrounds where former supporters express their sense of betrayal. The reaction has transcended typical corporate criticism, touching on deeper issues of trust and ethical responsibility in AI development.

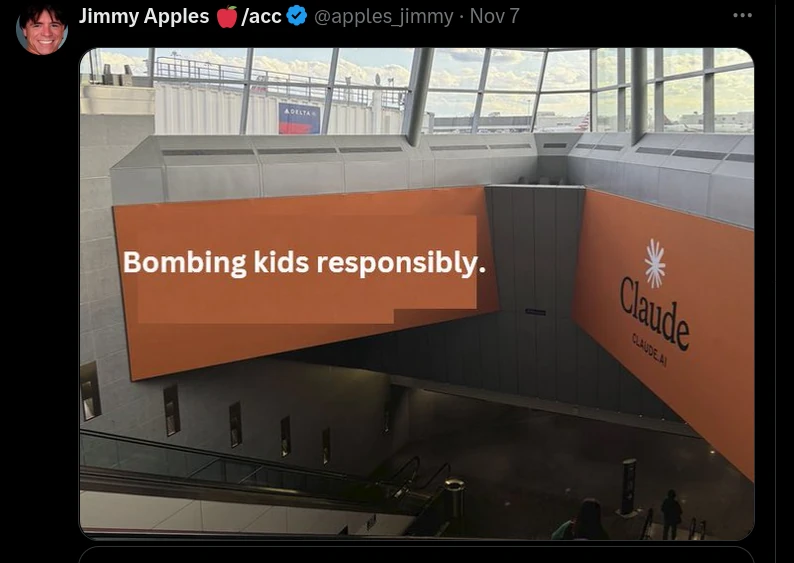

Jimmy Apples’ post “Bombing kids responsibly” ⁸ – a dark parody of Anthropic’s previous “AI safety first” messaging – has become a rallying cry for critics. The post exemplifies the profound disappointment within the community.

Other AI community members have joined the chorus:

- user N30 said: “Never liked or trusted Anthropic.”

- user andthatto agreed: “hypocrisy at its finest”

MemeticEngine posted a cynical meme about Anthropic’s abrupt course change on X.

Strategic Implications and Future Outlook

The partnership’s strategic implications reveal a complex web of opportunities and risks for Anthropic. While the immediate financial benefits are clear, the long-term consequences for both the company and the broader AI industry could be profound.

Market analysis suggests that Anthropic’s pivot might trigger similar moves by other AI companies, potentially creating a “race to the bottom” in terms of ethical standards. The company’s strategy appears to focus on three key areas:

- Building a moat through government contracts and security clearances

- Leveraging regulatory relationships to influence AI policy

- Establishing first-mover advantage in military AI applications

However, this strategy carries significant risks:

- Potential loss of talent as ethically-minded employees leave

- Reduced collaboration opportunities with academic institutions

- Increased scrutiny from AI safety advocacy groups

- Vulnerability to changing political winds and public opinion

Industry analysts project that while Anthropic may secure substantial government contracts in the short term, the reputational damage could hamper its ability to attract top talent and maintain its position as an AI innovation leader.

Policy and Regulatory Context

The partnership exists within a complex regulatory landscape that Anthropic appears to be trying to shape rather than merely navigate. The Biden administration’s AI framework, while emphasizing human oversight, has been criticized for leaving significant loopholes that companies like Anthropic can exploit.

Several key policy implications have emerged:

- The partnership potentially undermines global efforts to regulate military AI applications

- It sets a precedent for other AI companies seeking defense contracts

- Questions arise about the effectiveness of voluntary AI safety commitments

- The role of private companies in national security AI applications becomes increasingly controversial

Conclusion: The Cost of Compromise

Anthropic’s transformation from AI safety champion to military contractor partner represents more than just a corporate strategy shift – it marks a pivotal moment in the AI industry’s ethical development. The company’s journey from poster child to cautionary tale illuminates the challenges of maintaining ethical principles in an industry driven by rapid advancement and massive financial opportunities.

The damage to Anthropic’s reputation appears to be severe and may prove lasting. The company’s carefully constructed image as a responsible AI developer has been replaced by what many view as an example of ethical compromise in pursuit of profit and funding. This transformation raises fundamental questions about the possibility of maintaining ethical principles while pursuing commercial success in the AI sector.

Looking forward, several key lessons emerge:

- The fragility of reputation in the AI ethics space

- The challenges of balancing commercial interests with ethical commitments

- The power of community response in shaping corporate behavior

- The potential long-term consequences of short-term strategic decisions

As the AI industry continues to evolve, Anthropic’s story may serve as a watershed moment – a clear demonstration of how quickly ethical capital can be depleted and how difficult it may be to rebuild trust once lost. The question remains whether other AI companies will learn from this example or follow a similar path, potentially reshaping the entire landscape of AI development and deployment.

The controversy surrounding Anthropic’s decisions may eventually fade, but the fundamental questions it raises about the relationship between AI development, ethical principles, and commercial success will likely influence the industry’s development for years to come.

Sources:

- https://www.businesswire.com/news/home/20241107699415/en/Anthropic-and-Palantir-Partner-to-Bring-Claude-AI-Models-to-AWS-for-U.S.-Government-Intelligence-and-Defense-Operations

- https://arstechnica.com/ai/2024/11/safe-ai-champ-anthropic-teams-up-with-defense-giant-palantir-in-new-deal/

- https://futurism.com/the-byte/ethical-ai-anthropic-palantir

- https://www.theaiobserver.com/anthropic-challenges-market-with-claude-3-5-haikus-400-percent-price-increase/

- https://x.com/timnitGebru/status/1854644626348818763

- https://techcrunch.com/2024/11/07/amazon-may-up-its-investment-in-anthropic-on-one-condition/

- https://techcrunch.com/2024/11/07/anthropic-teams-up-with-palantir-and-aws-to-sell-its-ai-to-defense-customers/

- https://x.com/apples_jimmy/status/1854617154605215922
