Open-Source Revolution: Mochi 1 Brings Hollywood-Quality Video Generation to the Masses

Genmo has released Mochi 1, an open-source text-to-video generator that’s making waves in the tech community. This powerful AI model boasts exceptional motion quality and prompt adherence, generating realistic videos from text descriptions. It initially required high-end hardware, but community developments are making it more accessible. Within days of its release, Mochi 1 climbed to a top position in AI video generation rankings.

Mochi 1: Redefining AI-Generated Video

In a move that has surprised the tech industry and AI community, Genmo has released Mochi 1, an open-source text-to-video generator under the permissive Apache 2.0 license. The model is setting new standards in video generation, and many have expressed disbelief at its capabilities, especially given that it is open source.

Mochi 1 represents a significant leap forward in AI-generated video technology. It offers unmatched motion quality and superior adherence to text prompts, producing videos that closely align with user descriptions.

Standout Features and Capabilities

- Lifelike Motion and Realistic Details: One of Mochi 1’s most impressive features is its ability to generate incredibly realistic motion. The model adheres to physical laws, ensuring that even small details in generated videos appear natural and lifelike. This level of motion quality is unprecedented in open-source text-to-video generators.

- Precise Control and Prompt Adherence: Mochi 1 gives users detailed control over the generated content. It allows for precise specification of characters, settings, and actions, with the resulting videos closely matching the provided text descriptions. This level of accuracy allows creators to bring their visions to life with remarkable fidelity.

- Bridging the Uncanny Valley: Perhaps one of Mochi 1’s most notable achievements is its ability to generate consistent and fluid human actions and expressions. This capability helps the model produce videos with human elements that feel natural and believable, addressing the “uncanny valley” effect often seen in AI-generated content.

Technical Innovations

Advanced Architecture and Model Size

Mochi 1 is built on the innovative Asymmetric Diffusion Transformer (AsymmDiT) architecture. With 10 billion parameters, it’s currently the largest openly released video generative model, pushing the boundaries of AI-generated video capabilities.

Efficient Video Compression

The model incorporates AsymmVAE for efficient video compression, shrinking video data to 1/128 of its original size. This compression allows for more efficient storage and processing of generated videos without compromising quality.
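To get a feel for what a 128-fold reduction means, here is a rough back-of-the-envelope sketch. The frame size (848x480), the 8-bit RGB pixel format, and the 150-frame clip length are illustrative assumptions, not figures from this article; only the 480p resolution and the factor of 128 come from the text above.

```python
def raw_video_bytes(width: int, height: int, frames: int, channels: int = 3) -> int:
    """Size of an uncompressed clip, assuming one byte per color channel."""
    return width * height * channels * frames


def compressed_bytes(raw_bytes: int, ratio: int = 128) -> float:
    """Size after compression by the given ratio (128 is the figure quoted above)."""
    return raw_bytes / ratio


# Illustrative values only: a 480p clip with 150 frames (assumed, not from the article).
raw = raw_video_bytes(width=848, height=480, frames=150)
small = compressed_bytes(raw)

print(f"raw:        {raw / 1e6:.1f} MB")
print(f"compressed: {small / 1e6:.2f} MB")
```

Under these assumptions, a clip of roughly 183 MB of raw pixel data shrinks to about 1.4 MB, which is why working on the compressed representation is so much cheaper than working on raw frames.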

Hardware Requirements and Current Limitations

The initial release required substantial computing power: at least four H100 GPUs. Currently, Mochi 1 generates videos at 480p resolution, and warping can occur in cases of extreme motion. It’s optimized for photorealistic styles, which may limit its versatility for certain creative applications. The model and weights can be downloaded from https://github.com/genmoai/models.

Community Developments and Increasing Accessibility

The open-source nature of Mochi 1 has spurred rapid community development. Notably, the first quantizations have emerged, enabling the model to run on consumer-grade hardware with less than 20 GB of VRAM (https://github.com/victorchall/genmoai-smol). This development significantly broadens the potential user base for Mochi 1.
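A quick estimate shows why lower-precision weights matter for a model of this size. The sketch below counts memory for the weights alone at a few common precisions, using the 10 billion parameter figure quoted earlier. It ignores activations, the text encoder, and the video decoder, and it is not a description of how the linked repository actually reaches its memory footprint.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory for model weights alone, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


NUM_PARAMS = 10e9  # 10 billion parameters, as stated for Mochi 1

# Precisions chosen for illustration; the article does not say which one is used.
for label, bits in [("fp32", 32), ("bf16", 16), ("8-bit", 8), ("4-bit", 4)]:
    print(f"{label:>5}: {weight_memory_gb(NUM_PARAMS, bits):.0f} GB")
```

At 16 bits per parameter the weights alone take about 20 GB, so getting under that mark on a single consumer GPU generally means reducing precision further or moving part of the model off the GPU.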

Rapid Rise in AI Video Rankings

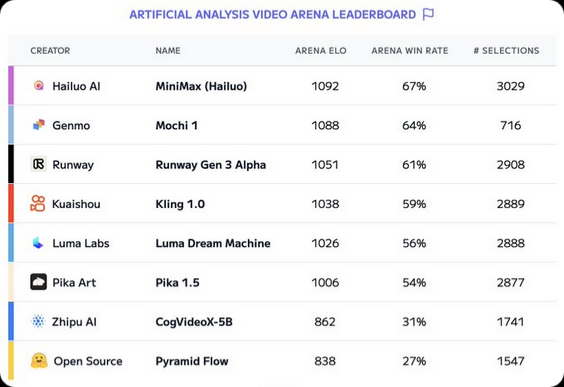

Within days of its release, Mochi 1 made a significant impact in the AI video generation field. It quickly climbed to second place on the Artificial Analysis Video Arena Leaderboard with an Elo score of 1088. This puts it just 4 points behind the leader, Hailuo AI Minimax (1092), and a substantial 37 points ahead of the third-place Runway Gen 3 Alpha (1051). This rapid ascent underscores Mochi 1’s exceptional performance and potential in the field of AI-generated video.
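To put those point gaps in perspective, the sketch below converts a rating difference into an expected head-to-head win rate using the standard Elo formula with a scale of 400. That the leaderboard uses exactly this formula and scale is an assumption; the ratings are the ones quoted above.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Expected win rate of A against B under the standard Elo model (scale 400)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


MOCHI_1 = 1088
HAILUO_MINIMAX = 1092
RUNWAY_GEN3_ALPHA = 1051

print(f"Mochi 1 vs. Hailuo AI Minimax:  {expected_score(MOCHI_1, HAILUO_MINIMAX):.1%}")
print(f"Mochi 1 vs. Runway Gen 3 Alpha: {expected_score(MOCHI_1, RUNWAY_GEN3_ALPHA):.1%}")
```

Under this model, a 4-point gap is close to a coin flip (roughly 49% for Mochi 1), while a 37-point lead corresponds to being preferred in roughly 55% of direct comparisons.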

Open-Source Impact and Responsibilities

Mochi 1’s open-source nature offers transparency and collaborative improvement opportunities, but also raises concerns about potential misuse. Unlike commercial models with built-in safeguards, Mochi 1 relies on community vigilance to prevent harmful applications.

The open-source community can leverage this transparency to:

- Develop detection algorithms for deepfakes

- Implement watermarking techniques

- Rapidly deploy ethical guidelines and safeguards

Mochi 1’s accessibility may spark innovations in underserved areas and niche industries, potentially leading to more diverse AI applications than closed systems typically produce.

A New Era of Creative Possibilities

Mochi 1 represents a significant advancement in AI-generated video technology. Its open-source nature, combined with its impressive capabilities, opens up new possibilities for creators, researchers, and enthusiasts. As the technology continues to evolve and become more accessible, we can anticipate innovative applications across various industries, from entertainment and education to marketing and beyond. We can’t wait to see what the AI community will do with it.

The release of Mochi 1 is not just a technological achievement; it’s a catalyst for a new era of creative expression and visual storytelling. As users begin to explore its potential, we stand on the brink of a revolution in how we create, consume, and interact with video content.
