The Paradox of GPT-4o: Faster Yet Dumber!

The November 2024 release of OpenAI’s GPT-4o model shows significant changes from its August predecessor. Key findings include a notable performance regression across multiple benchmarks, with scores now comparable to those of the smaller GPT-4o mini model, and a substantial increase in output speed. Together, these observations suggest that the November release may be a smaller model than its August counterpart. Despite these changes, OpenAI has kept the same pricing. Developers are advised to be cautious about adopting the new version and to test it thoroughly before moving workloads to it.

Introduction

GPT-4o, first released in May 2024, was designed to surpass the existing GPT-3.5 and GPT-4 models. According to OpenAI, it delivered state-of-the-art benchmark results in voice, multilingual, and vision tasks, and it was aimed at advanced applications such as real-time translation and conversational AI. However, recent evaluations of the November 2024 release have revealed unexpected changes in the model’s performance and capabilities.

Performance Regression

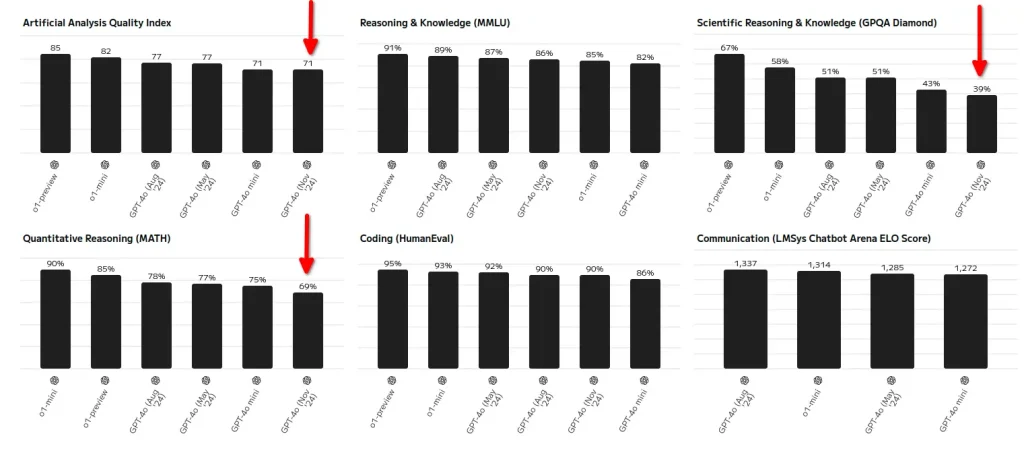

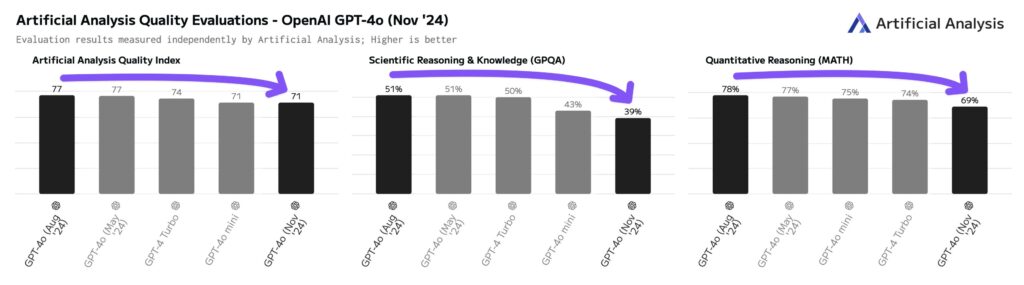

The November release of GPT-4o has shown a significant decline in performance compared to the August release ¹. The regression was reported less than 24 hours after OpenAI announced the update, which it said improved creative writing, file handling, and response quality. However, independent evaluations have consistently measured lower scores across multiple benchmarks:

- The Artificial Analysis Quality Index dropped from 77 to 71, now equal to GPT-4o mini.

- The GPQA Diamond score decreased from 51 to 39.

- The MATH score decreased from 78 to 69.
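To put the three declines on a common scale, the short sketch below computes the absolute and relative drop for each benchmark. The scores are the ones listed above; the dictionary layout and the `score_changes` name are illustrative choices, not part of any published tooling.

```python
# Scores reported for the August and November GPT-4o releases: (August, November).
SCORES = {
    "Artificial Analysis Quality Index": (77, 71),
    "GPQA Diamond": (51, 39),
    "MATH": (78, 69),
}


def score_changes(scores: dict[str, tuple[float, float]]) -> dict[str, tuple[float, float]]:
    """Return {benchmark: (absolute change, relative change in percent)}."""
    changes = {}
    for name, (old, new) in scores.items():
        delta = new - old
        changes[name] = (delta, 100.0 * delta / old)
    return changes


if __name__ == "__main__":
    for name, (delta, pct) in score_changes(SCORES).items():
        print(f"{name}: {delta:+.0f} points ({pct:+.1f}%)")
```

The relative drops come out to about 7.8% for the Quality Index, 11.5% for MATH, and 23.5% for GPQA Diamond, so the graduate-level reasoning benchmark lost the most ground proportionally.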

These declines bring OpenAI’s flagship model down to roughly the level of the smaller GPT-4o mini, raising concerns about the effectiveness of the latest update.

While some users have reported improvements in creative writing and response quality, the model appears to have been “nerfed” in logical reasoning and complex problem-solving. This has fueled speculation in the AI community that OpenAI may be dividing its model lineup by application: the GPT-4o series, optimized for fast, fluent responses and creative writing at the cost of reasoning ability, and the o1 series, focused on reasoning, mathematics, and general STEM queries at the cost of slower responses and a much higher price. OpenAI has not confirmed this.

Despite its regression on several quantitative benchmarks, the November GPT-4o release has performed surprisingly well on the LMArena leaderboard ³, where it currently holds second place, just behind Google’s Gemini-Exp-1121. Some in the AI community suspect that the model was specifically optimized for this leaderboard, possibly at the expense of other capabilities. If true, this would raise questions about how reliably such leaderboards reflect overall model capability and performance.
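LMArena ranks models from pairwise human votes rather than from fixed answer keys, which is one way a model can climb it while losing ground on GPQA or MATH. The sketch below uses a classic Elo update as a simplified stand-in for that mechanism. LMArena’s own methodology differs in detail (it has described fitting a Bradley-Terry model over all votes), and the K-factor of 32, the starting ratings, and the vote sequence are arbitrary illustrative assumptions.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A's answer is preferred over B's under the Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def elo_update(rating_a: float, rating_b: float, a_won: bool,
               k: float = 32.0) -> tuple[float, float]:
    """Update both ratings after one vote; only the voter's preference enters the update."""
    score_a = 1.0 if a_won else 0.0
    delta = k * (score_a - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


if __name__ == "__main__":
    a, b = 1000.0, 1000.0
    # Hypothetical votes: True means the voter preferred model A's answer.
    for vote in [True, True, False, True]:
        a, b = elo_update(a, b, vote)
    print(round(a, 1), round(b, 1))
```

Because the update sees only which answer was preferred, anything that sways voters, including tone, formatting, or length, moves a model’s rating just as correctness does.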

Speed Increase

In contrast to the performance regression, the November GPT-4o model has demonstrated a significant increase in output speed. Researchers observed:

- Output speed rose from approximately 80 tokens/s to about 180 tokens/s, an increase of more than 2x.

- Launch-day speeds are often elevated because OpenAI provisions capacity ahead of adoption, but researchers have not previously observed a launch-day speed difference this large for OpenAI models.
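The arithmetic behind the speed comparison is simple: 180 / 80 = 2.25, and streaming time scales inversely with output speed. The sketch below assumes output speed is computed from per-token arrival timestamps as the tokens received after the first one divided by the time since the first one arrived, so time to first token is excluded; this approximates, but is not necessarily identical to, how Artificial Analysis measures it. The 1,000-token response length is a hypothetical example.

```python
def output_speed(token_times: list[float]) -> float:
    """Tokens per second after the first token arrives; time to first token is excluded."""
    if len(token_times) < 2:
        raise ValueError("need at least two token timestamps")
    elapsed = token_times[-1] - token_times[0]
    if elapsed <= 0:
        raise ValueError("timestamps must increase")
    return (len(token_times) - 1) / elapsed


def generation_seconds(n_tokens: int, tokens_per_s: float) -> float:
    """Time to stream n_tokens at a constant output speed."""
    return n_tokens / tokens_per_s


if __name__ == "__main__":
    august, november = 80.0, 180.0  # approximate speeds reported for the two releases
    print(f"speedup: {november / august:.2f}x")  # 2.25x
    for label, speed in [("August", august), ("November", november)]:
        # Hypothetical 1,000-token response.
        print(f"{label}: {generation_seconds(1000, speed):.1f} s")
```

For a 1,000-token answer, that is about 12.5 s versus 5.6 s of streaming time, not counting time to first token.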

Model Size Hypothesis

Based on the performance regression and speed increase, researchers have inferred that the November release of GPT-4o is likely a smaller model than the August version. Two main observations support this hypothesis:

- The performance regression across multiple benchmarks suggests a reduction in model capabilities.

- The significant increase in output speed is consistent with the behavior of smaller models, which can typically run faster on the same hardware.
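A common back-of-envelope explanation for the second point: when a model decodes one token at a time, each step must read all of its active weights from memory, so single-stream speed is bounded roughly by memory bandwidth divided by the bytes of weights read per token. The sketch below uses that crude model, which ignores batching, KV-cache traffic, quantization, hardware changes, and techniques such as speculative decoding. The bandwidth and parameter counts are hypothetical, since OpenAI has not disclosed GPT-4o’s size.

```python
def memory_bound_tokens_per_s(active_params: float, bytes_per_param: float,
                              bandwidth_bytes_per_s: float) -> float:
    """Upper bound on single-stream decode speed if every step reads all active weights once."""
    return bandwidth_bytes_per_s / (active_params * bytes_per_param)


def implied_size_ratio(old_speed: float, new_speed: float) -> float:
    """Under the same crude model and hardware, new/old bytes read per token = old/new speed."""
    return old_speed / new_speed


if __name__ == "__main__":
    bandwidth = 20e12  # hypothetical aggregate memory bandwidth, bytes/s
    big = memory_bound_tokens_per_s(200e9, 2, bandwidth)    # hypothetical 200B active params, 16-bit
    small = memory_bound_tokens_per_s(100e9, 2, bandwidth)  # hypothetical model half that size
    print(f"half the weights -> {small / big:.1f}x the speed bound")
    print(f"80 -> 180 tokens/s implies a bytes-per-token ratio of ~{implied_size_ratio(80, 180):.2f}")
```

Under this model alone, the reported speedup would correspond to reading roughly 44% as many bytes per token, but given the simplifications listed above, that is weak, indirect evidence about model size at best.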

However, other techniques, such as improvements in speculative decoding, could also explain the speed increase, so further investigation is needed to confirm this hypothesis.
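To see why speculative decoding is a plausible alternative explanation, consider a toy greedy version: a cheap draft model proposes several tokens, and the large target model checks them, keeping the agreeing prefix plus one token of its own. With greedy decoding the output is exactly what the target model would produce alone, so speed can rise with no change in capability. The `Model` type, the integer "tokens", and the toy models are hypothetical stand-ins. Real systems verify all proposals in one batched forward pass and use a probabilistic acceptance rule when sampling, and nothing here describes how OpenAI actually serves GPT-4o.

```python
from typing import Callable

# Hypothetical stand-in for a language model: maps a token prefix to its greedy next token.
Model = Callable[[list[int]], int]


def greedy_decode(target: Model, prompt: list[int], n: int) -> list[int]:
    """Baseline: one target-model call per generated token."""
    seq = list(prompt)
    for _ in range(n):
        seq.append(target(seq))
    return seq[len(prompt):]


def speculative_decode(target: Model, draft: Model, prompt: list[int],
                       n: int, k: int = 4) -> tuple[list[int], int]:
    """Greedy speculative decoding; returns (tokens, number of target verification passes)."""
    seq = list(prompt)
    passes = 0
    while len(seq) - len(prompt) < n:
        # 1. The cheap draft model proposes k tokens autoregressively.
        ctx = list(seq)
        proposal = []
        for _ in range(k):
            token = draft(ctx)
            proposal.append(token)
            ctx.append(token)
        # 2. The target checks every proposed position (one batched pass in a real system).
        passes += 1
        ctx = list(seq)
        for token in proposal:
            expected = target(ctx)
            ctx.append(expected)  # always keep the target's own choice
            if expected != token:
                break  # first disagreement: discard the rest of the proposal
        else:
            ctx.append(target(ctx))  # all k accepted: the same pass yields a bonus token
        seq = ctx
    return seq[len(prompt):len(prompt) + n], passes


def toy_target(prefix: list[int]) -> int:
    return (prefix[-1] * 3 + 1) % 17  # toy "large" model


def toy_draft(prefix: list[int]) -> int:
    return toy_target(prefix) if len(prefix) % 3 else 0  # toy draft that is sometimes wrong


if __name__ == "__main__":
    tokens, passes = speculative_decode(toy_target, toy_draft, [2], 20)
    print(tokens == greedy_decode(toy_target, [2], 20), passes)
```

Each verification pass yields between 1 and k + 1 tokens, so a good draft model cuts the number of expensive target passes, and generation speeds up as long as drafting is much cheaper than verification.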

Pricing and Recommendations

Despite the potential decrease in model size and capabilities, OpenAI has not changed the pricing for the November GPT-4o release. This has led to speculation in the AI community that OpenAI may be trying to increase revenue by offering a potentially less capable model at the same price without being fully transparent about the changes. Some critics argue that such a lack of transparency could erode trust between AI providers and their users and set a concerning precedent for the industry. These claims remain speculative, however, and OpenAI has not officially responded to them.

Given this situation, researchers and analysts have made the following recommendations:

- Developers should not shift workloads to the new version without careful testing.

- It is advised to continue using the August version until thorough evaluations of the November release have been conducted.
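Careful testing can be as simple as running the same evaluation set against both releases and comparing pass rates before switching. The sketch below keeps the model call abstract: each `ask` function is a hypothetical callable you would implement yourself, for example by wrapping the OpenAI Python SDK’s `client.chat.completions.create` with a dated snapshot name such as `gpt-4o-2024-08-06` or `gpt-4o-2024-11-20`. The 2-point tolerance and the example cases are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Callable

Ask = Callable[[str], str]  # hypothetical: sends a prompt to one pinned model, returns its answer


@dataclass
class EvalCase:
    prompt: str
    check: Callable[[str], bool]  # returns True if the model's answer is acceptable


def pass_rate(ask: Ask, cases: list[EvalCase]) -> float:
    """Fraction of cases whose answer passes its check."""
    if not cases:
        raise ValueError("need at least one evaluation case")
    passed = sum(1 for case in cases if case.check(ask(case.prompt)))
    return passed / len(cases)


def safe_to_migrate(ask_current: Ask, ask_candidate: Ask,
                    cases: list[EvalCase], tolerance: float = 0.02) -> bool:
    """Migrate only if the candidate's pass rate is at most `tolerance` below the current one."""
    current = pass_rate(ask_current, cases)
    candidate = pass_rate(ask_candidate, cases)
    print(f"current: {current:.1%}  candidate: {candidate:.1%}")
    return candidate >= current - tolerance


if __name__ == "__main__":
    cases = [
        EvalCase("What is 17 * 23?", lambda answer: "391" in answer),
        EvalCase("What is the capital of Australia?", lambda answer: "Canberra" in answer),
    ]

    # Hypothetical stand-ins; in practice each would call a pinned model snapshot.
    def current_model(prompt: str) -> str:
        return "391" if "17" in prompt else "Canberra"

    def candidate_model(prompt: str) -> str:
        return "401" if "17" in prompt else "Canberra"

    print(safe_to_migrate(current_model, candidate_model, cases))
```

Pinning both callables to dated snapshots, rather than a floating alias such as `gpt-4o`, keeps the comparison stable if the alias is later repointed to a newer release.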

As stated by Artificial Analysis, “Given that OpenAI has not cut prices for the Nov 20th version, we recommend that developers do not shift workloads away from the August version without careful testing.” ¹

Conclusion

The November 2024 release of GPT-4o presents a worrying situation: a significant performance regression coupled with a substantial increase in output speed. This unexpected combination has led researchers to hypothesize that the new release may be a smaller model than its predecessor. While the increased speed could be beneficial for certain applications, the decrease in performance across multiple benchmarks raises concerns about the overall capabilities of the updated model.

The lack of price adjustment from OpenAI, despite these changes, further complicates the situation for developers and users of the GPT-4o model. As the AI community continues to evaluate and understand these changes, it is crucial for developers to approach the new release with caution, conducting thorough testing before transitioning any critical workloads.

This situation highlights the dynamic nature of AI model development and the importance of continuous, independent evaluation of new releases. It also underscores the need for transparency in AI development, particularly when changes may significantly impact model performance and capabilities.

Sources: